AI shouldn’t be a risk. IronCloud delivers secure, self-hosted LLM infrastructure purpose-built for CUI, ITAR, and FedRAMP High environments.

IronCloud is engineered for the strictest security standards — not adapted after the fact. Our stack is optimized for isolated VPC deployment, supporting ITAR, DFARS/NIST 800-171, and CMMC requirements out of the box. From disk-level encryption to private containerized inference and audit-ready logging, every layer is locked down.

🛡️ CUI & ITAR-compliant by design in supported deployments

🛡️ Runs fully within your environment, with no external calls

🛡️ Supports SSP documentation, incident response, and audit trails

🛡️ Integrates with GCC High, AWS GovCloud, and FedRAMP-approved services

Your Data. Your Models. Your Rules.

IronCloud puts you in full control — no phoning home, no vendor lock-in, and no shared inference. Every model, policy, and permission is managed within your own environment.

Private Inference: No External Calls, Ever

IronCloud executes all inference within your infrastructure, whether hosted on AWS GovCloud, Azure GCC High, or deployed in a fully air-gapped environment. There are no IronCloud-managed services, no external callbacks, and no third-party egress. Whether you route queries to containerized models via LocalAI and Ollama or make scoped calls to AWS Bedrock or Azure OpenAI from within your own cloud account or tenant, all LLM interactions remain inside your trusted boundary. This architecture satisfies ITAR’s closed-system requirements and aligns with FedRAMP High and CMMC Level 2 by enforcing strict boundary controls, minimizing the attack surface, and keeping your data under your control.

- Fully compatible with SCIF, IL5, and enclave deployments

- Meets ITAR closed system definition and supports zero egress assurance

Bring Your Own Models (BYOM)

IronCloud supports true model independence. You can integrate and switch between Claude (via Bedrock), GPT-4o (via Azure), LLaMA, Mistral, or any other model that fits your mission — including containerized deployments for total isolation. This approach aligns with the Zero Trust principle of reducing reliance on any single vendor or implicit trust path. You maintain policy-level control over which models are available to which users, and changes to model availability or routing can be made in config without requiring redeployment. This flexibility supports evolving CMMC and NIST 800-171 environments where classification level, licensing, and inference context matter.
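As a rough illustration of config-driven model availability, the sketch below keeps routes in a plain table and filters them by group membership. The `ModelRoute` type, the group names, and the backend labels are hypothetical and are not IronCloud's actual configuration schema.

```python
from dataclasses import dataclass

# Hypothetical routing table: field names and values are illustrative only.
@dataclass(frozen=True)
class ModelRoute:
    name: str                  # model name shown to users
    backend: str               # e.g. "bedrock", "azure-openai", "ollama"
    allowed_groups: frozenset  # groups permitted to use this model

ROUTES = [
    ModelRoute("claude", "bedrock", frozenset({"analysts", "engineering"})),
    ModelRoute("gpt-4o", "azure-openai", frozenset({"engineering"})),
    ModelRoute("llama-local", "ollama",
               frozenset({"analysts", "engineering", "itar-program"})),
]

def models_for(groups, routes=ROUTES):
    """Return the model names visible to a user with the given groups."""
    member = set(groups)
    return [r.name for r in routes if r.allowed_groups & member]

print(models_for(["itar-program"]))  # prints ['llama-local']
```

Because availability is data rather than code, adding or removing a model in a scheme like this is an edit to the table, not a redeployment.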

RBAC + OpenID Access Control

IronCloud enforces strict role- and identity-based access controls through full RBAC integration, backed by OpenID Connect. Whether using Azure AD (including GCC High), Okta, or another identity provider, you can define which users or groups have access to which agents, models, or tools. Model access can be scoped to specific roles or tags — for example, restricting dev teams to non-production models — and all actions are logged with impersonation traceability. These controls map directly to NIST 800-171 access requirements (3.1.1–3.1.5), and support CMMC Level 2 and Zero Trust objectives for identity-aware policy enforcement.

- Supports user- and role-scoped API access with optional key expiration

- Compatible with Azure GCC High, Okta, Entra ID, and custom OIDC setups
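To make role- and tag-scoped model access concrete, here is a minimal deny-by-default check. It assumes the caller's roles have already been extracted from a validated OIDC token; the role names, tags, and model names are hypothetical examples rather than IronCloud's real policy format.

```python
# Hypothetical mapping of roles to the model tags they may use.
ROLE_ALLOWED_TAGS = {
    "developer": {"non-production"},
    "analyst": {"production"},
    "admin": {"production", "non-production"},
}

# Hypothetical tag assigned to each registered model.
MODEL_TAGS = {
    "mistral-dev": "non-production",
    "claude-prod": "production",
}

def can_use_model(roles, model):
    """Allow access only if some role of the caller permits the model's tag."""
    tag = MODEL_TAGS.get(model)
    if tag is None:
        return False  # unknown model: deny by default
    # Unknown roles map to an empty tag set, so they grant nothing.
    return any(tag in ROLE_ALLOWED_TAGS.get(role, set()) for role in roles)

print(can_use_model(["developer"], "claude-prod"))  # prints False
print(can_use_model(["developer"], "mistral-dev"))  # prints True
```

Treating unknown models and unknown roles as a denial means a missing entry can never widen access.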

Audit Logging and Telemetry

IronCloud records every inference event — including the user, model, prompt, and token count — and makes that data available through your own SIEM or log pipeline. You can export logs to CloudWatch, Azure Monitor, or a self-hosted FluentBit stack, and all records retain source identity for traceability. This level of auditability supports NIST 800-92 and 800-171 (3.3.x family), providing clear evidence of system access and data usage. Logs are never anonymized or obfuscated, ensuring accountability across users and workflows, and making IronCloud suitable for CMMC Level 2 and FedRAMP High environments where logging is a core control.
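One way to picture such a record is a single JSON line per inference event, which most log shippers and SIEMs can ingest. The field names below, including the optional `acting_for` field used to illustrate impersonation traceability, are assumptions for this sketch and not IronCloud's documented log schema.

```python
import json
from datetime import datetime, timezone

def audit_record(user, model, prompt, token_count, acting_for=None, now=None):
    """Build one JSON log line for an inference event (hypothetical schema)."""
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    record = {
        "timestamp": timestamp,
        "event": "inference",
        "user": user,              # source identity is kept, never anonymized
        "model": model,
        "prompt": prompt,
        "token_count": token_count,
    }
    if acting_for is not None:
        record["acting_for"] = acting_for  # who the caller was impersonating
    # Sorted keys give a stable line layout for downstream parsers.
    return json.dumps(record, sort_keys=True)

line = audit_record("alice@example.gov", "claude", "Summarize the SSP.", 412)
print(line)
```

A line like this can be written to stdout or a file and forwarded by whatever collector your environment already uses.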

Encrypted Storage and TLS Everywhere

All data stored by IronCloud — including audit logs, chat history, vector embeddings, and configuration state — is encrypted at rest using your platform’s native disk encryption, file-level controls, or KMS integration. All internal and external connections are encrypted via TLS, with support for bring-your-own certificates and mutual TLS (mTLS) for service-to-service authentication. IronCloud can also integrate with vault solutions for secrets management. These controls align with FedRAMP High encryption requirements (SC-12, SC-13, SC-28) and support FIPS 140-3 compliant architectures, making the system suitable for regulated workloads requiring cryptographic assurance.
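For readers unfamiliar with mutual TLS, the sketch below shows the server side of it using Python's standard `ssl` module: the service presents its own certificate and refuses clients that do not present one signed by a trusted CA. The function name and file-path parameters are hypothetical, and whether the underlying cryptography is FIPS-validated depends on the OpenSSL build in use, not on this code.

```python
import ssl

def server_mtls_context(cert_file=None, key_file=None, client_ca_file=None):
    """Return a server-side TLS context that requires client certificates."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # reject older protocols
    ctx.verify_mode = ssl.CERT_REQUIRED           # client must present a cert
    if cert_file:
        # Bring-your-own server certificate and private key.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if client_ca_file:
        # CA bundle used to verify the certificates clients present.
        ctx.load_verify_locations(cafile=client_ca_file)
    return ctx

ctx = server_mtls_context()  # no files loaded here; pass real paths in practice
print(ctx.verify_mode == ssl.CERT_REQUIRED)  # prints True
```

In a real deployment all three paths would be supplied, and the same idea applies in reverse on the client side of each service-to-service connection.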

Multi-Tenant Safe by Default

IronCloud is built to isolate projects, teams, and data at the workspace level. Each workspace can be configured with separate identity mappings, storage boundaries, and model routing policies. This allows different business units, customer programs, or development and production environments to operate within the same system without risk of cross-contamination. Per-tenant quota enforcement, token accounting, and logging make the platform suitable for organizations seeking CMMC L2 and FedRAMP alignment, where data segregation and boundary enforcement are critical to passing audits and minimizing lateral risk.
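Per-tenant quota enforcement and token accounting can be sketched as a small ledger keyed by workspace. The `WorkspaceQuota` class and the workspace names are hypothetical; a production system would persist usage and handle concurrency, which this example leaves out.

```python
class WorkspaceQuota:
    """Track token usage per workspace and refuse requests over the limit."""

    def __init__(self, limits):
        self._limits = dict(limits)               # workspace -> token budget
        self._used = {ws: 0 for ws in self._limits}

    def charge(self, workspace, tokens):
        """Record usage if it fits the budget; return False if it would not."""
        if workspace not in self._limits:
            raise KeyError(f"unknown workspace: {workspace}")
        if self._used[workspace] + tokens > self._limits[workspace]:
            return False                          # over quota: nothing recorded
        self._used[workspace] += tokens
        return True

    def used(self, workspace):
        return self._used[workspace]

quota = WorkspaceQuota({"program-a": 1000, "program-b": 500})
print(quota.charge("program-a", 800))  # prints True
print(quota.charge("program-a", 300))  # prints False
print(quota.used("program-b"))         # prints 0
```

Each workspace has its own counter, so heavy use in one program never consumes another program's budget.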

Zero Trust Architecture Alignment

IronCloud is natively aligned with Zero Trust principles as defined by NIST 800-207 and DoD guidance. Every service and user interaction is authenticated and explicitly authorized — there is no implicit trust between agents, models, or users. Access to models, RAG pipelines, and even internal TTS/STT services is governed by policy, and all components operate on a least-privilege basis. This architecture is designed to support modern federal mandates for Zero Trust adoption, as well as underlying compliance with CMMC Level 2, FedRAMP High, and NIST 800-171 access control families.

Azure OpenAI Security Architecture

IronCloud supports secure deployments of Azure OpenAI, including for customers operating in Azure Commercial, GCC, and GCC High environments. When using Azure OpenAI via IronCloud LLM, your data stays protected within Microsoft’s enterprise-grade security boundaries.

The architecture diagram below outlines how prompts, completions, storage, and finetuning are securely handled within the Azure OpenAI platform.

Key Security Controls in Azure OpenAI

- Data Isolation: All resource-specific storage is double encrypted and isolated per deployment.

- Customer-Controlled Storage: Assistants, Threads, and Files APIs use storage exclusively accessible and manageable by your Azure tenant.

- Content Filtering: Prompts and completions are filtered synchronously or asynchronously depending on deployment region.

- Finetuning Safeguards: Finetuned models are customer-exclusive, subject to safety assessment before deployment.

- Abuse Monitoring: Microsoft applies default logging and abuse prevention. You may request modifications via the official form.

IronCloud LLM can deploy your Azure OpenAI services within your Azure tenant—ensuring all data, models, and inference calls stay inside your compliance boundary.

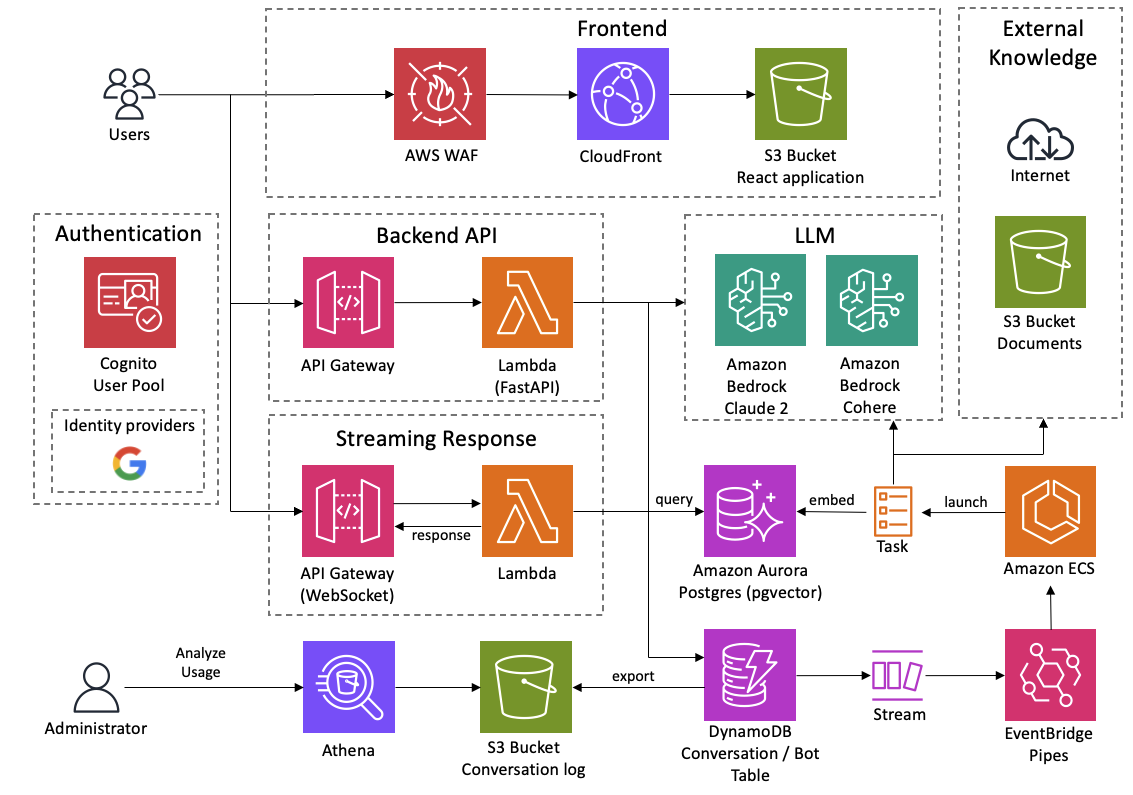

AWS Bedrock Security & Architecture Overview

IronCloud supports secure deployments of Amazon Bedrock, allowing customers to use foundation models like Claude and Titan within a private, compliant AWS environment.

All Bedrock model interactions occur within your AWS account using IAM-authenticated access and private VPC networking. Applications connect through SDKs or API Gateway to call Bedrock securely without public internet exposure. Network traffic is controlled using VPC endpoints, PrivateLink, and VPC Lattice for fine-grained access policies.
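One common way to keep Bedrock calls off the public internet is an IAM policy that denies model invocation unless the request arrives through a specific VPC endpoint. The sketch below builds such a policy document as a Python dictionary. The helper name, the endpoint ID, and the default partition and region are placeholders chosen for illustration, and the result should be checked against current AWS documentation before use.

```python
import json

def bedrock_vpce_only_policy(vpce_id, partition="aws-us-gov",
                             region="us-gov-west-1"):
    """Build an IAM policy denying Bedrock invocation outside one VPC endpoint."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyBedrockOutsideVpcEndpoint",
                "Effect": "Deny",
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                "Resource": f"arn:{partition}:bedrock:{region}::foundation-model/*",
                # Matches any request that did not come through this endpoint.
                "Condition": {"StringNotEquals": {"aws:SourceVpce": vpce_id}},
            }
        ],
    }

# "vpce-0123456789abcdef0" is a placeholder endpoint ID.
policy = bedrock_vpce_only_policy("vpce-0123456789abcdef0")
print(json.dumps(policy, indent=2))
```

A deny statement like this sits alongside whatever allow statements grant Bedrock access, so a request from outside the endpoint is refused even if the caller is otherwise authorized.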

Data is encrypted in transit with TLS and at rest using AWS KMS. Prompt and completion data is never used for model training and remains isolated per customer. Models run in isolated compute environments provisioned on demand or in advance.

Fine-tuned models are stored in your S3 bucket and accessible only through IAM. Access and activity are logged through CloudTrail and monitored via CloudWatch. For multi-account organizations, Bedrock access can be centralized through VPC Lattice and proxy layers, enabling shared access with strict isolation and control.

IronCloud LLM integrates Bedrock directly into your AWS infrastructure with no external dependencies, ensuring secure model access and full compliance with internal and regulatory requirements.

![flow[1]](https://ironcloudllm.com/wp-content/uploads/2025/07/flow1.jpg)