How IronCloud Works

IronCloud runs fully self-hosted AI that connects to your internal systems, indexes your content, and makes it searchable, auditable, and usable, all inside your own infrastructure.

It’s built for teams that need real results without external APIs, SaaS lock-in, or compliance risks.

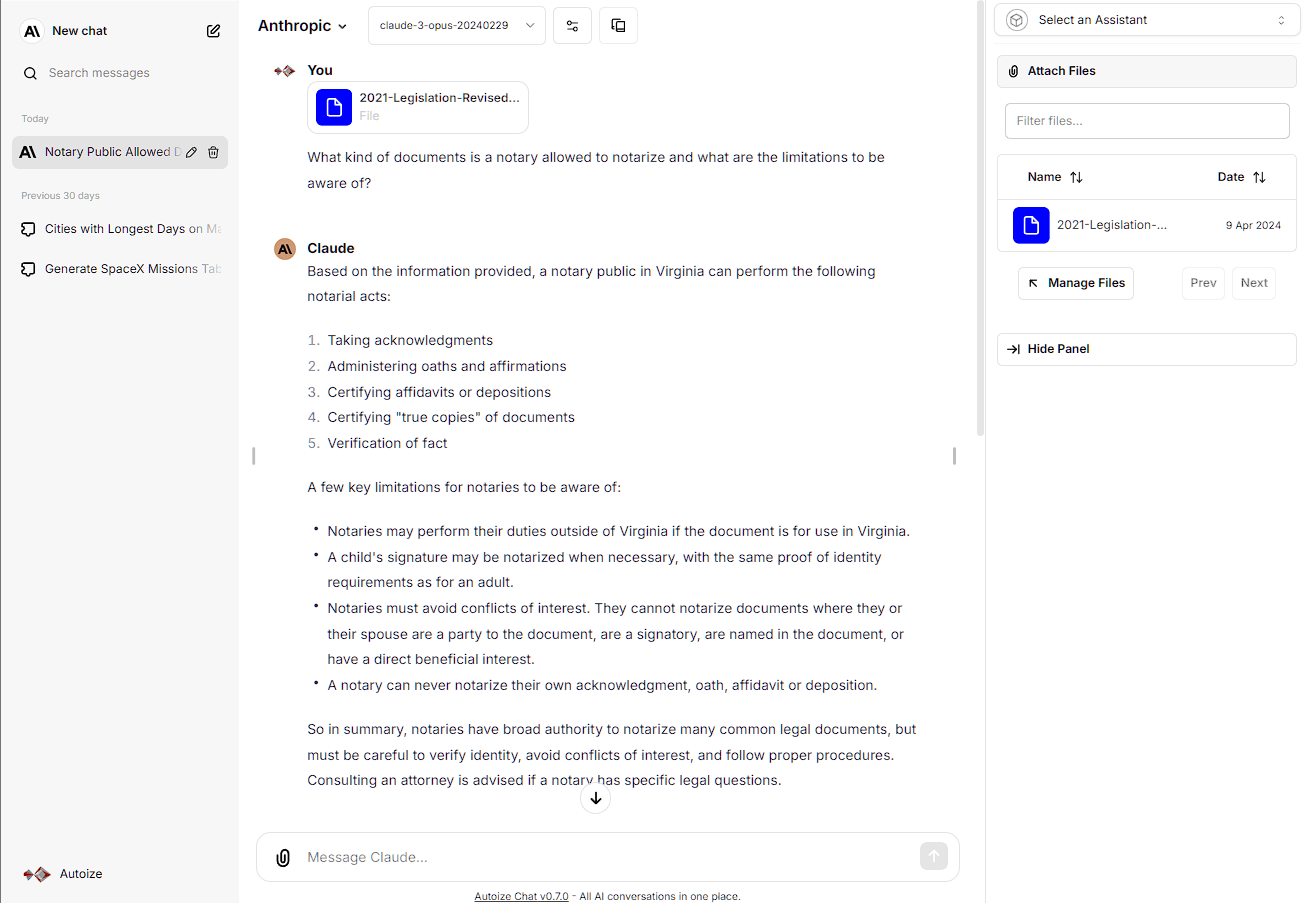

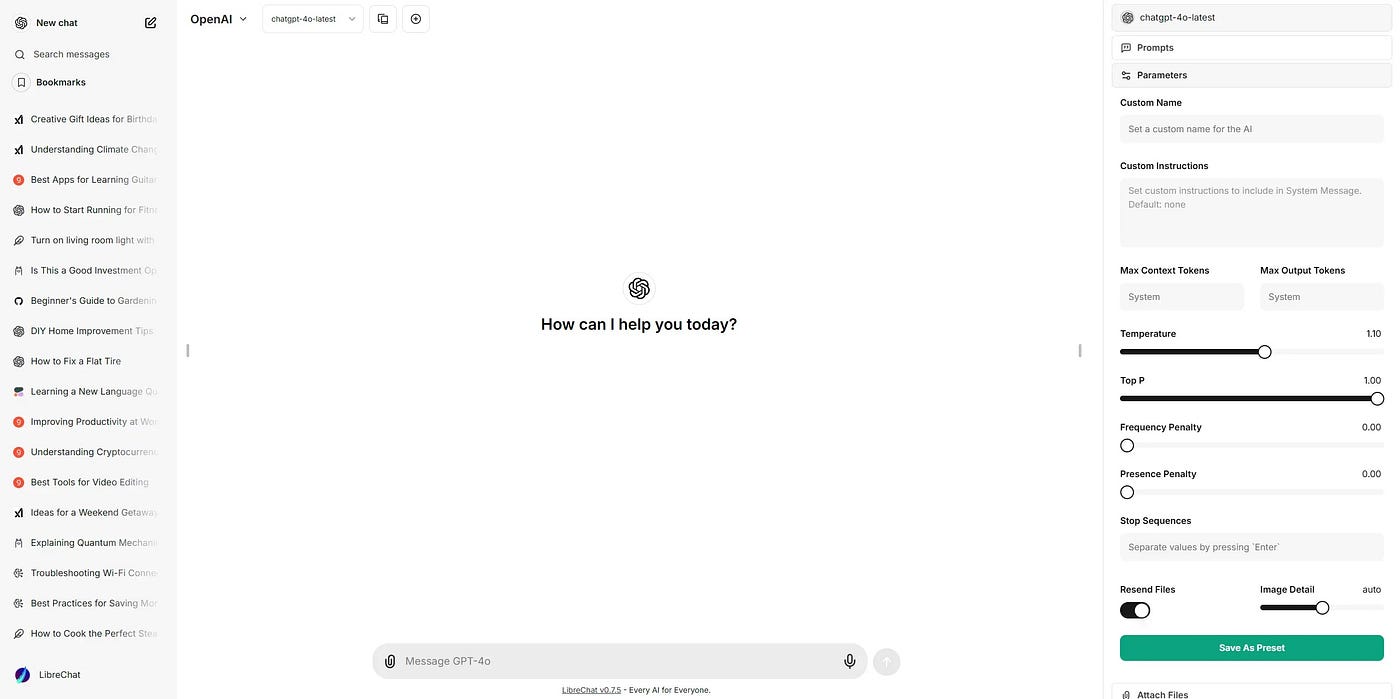

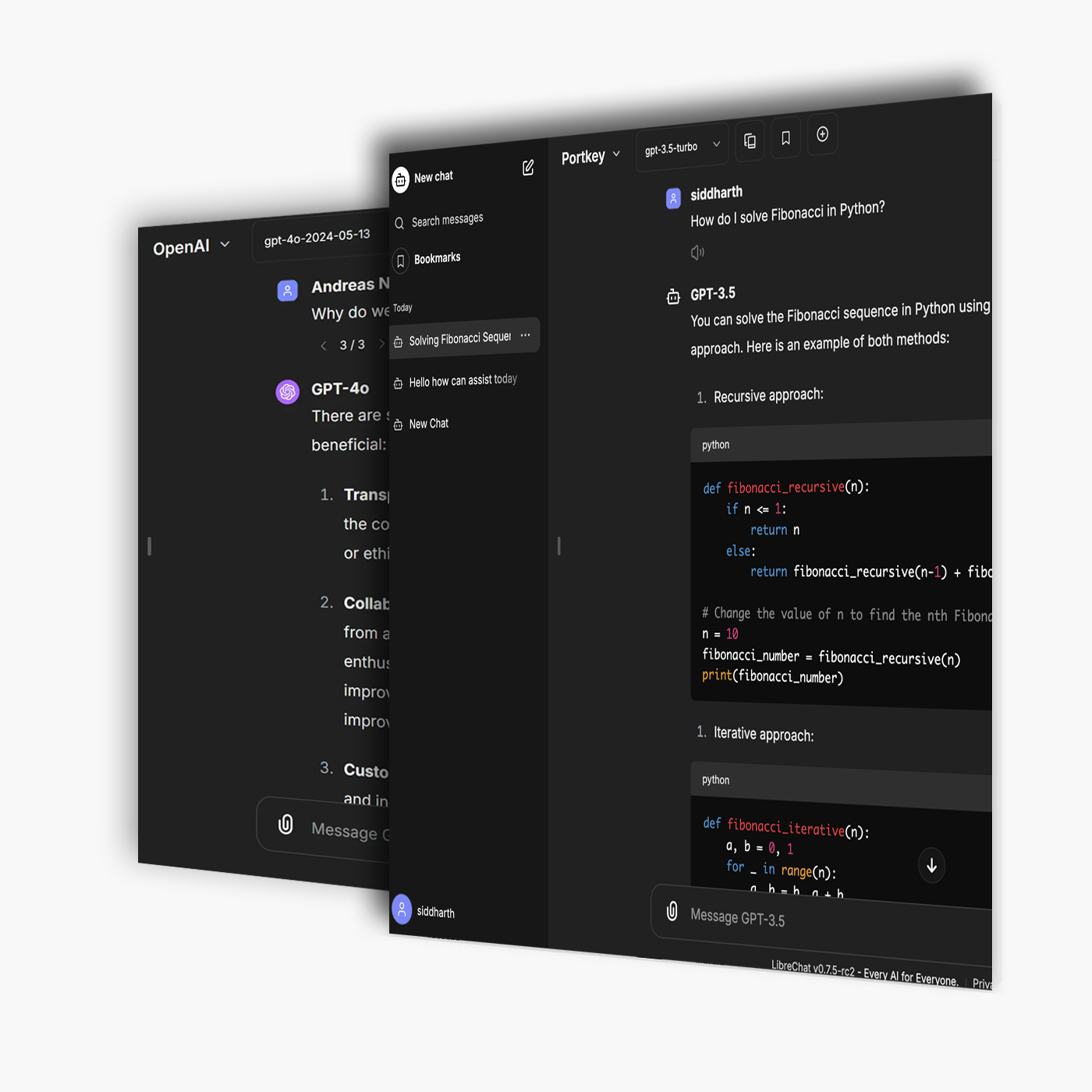

The GPT Experience — Without the SaaS

A secure, self-hosted chat interface that feels just like ChatGPT — but runs entirely on your infrastructure.

IronCloud delivers the chat interface users expect from platforms like ChatGPT, running entirely inside your environment. The interface you see here is LibreChat: a fully self-hosted frontend that looks and feels like familiar commercial tools.

Users can ask natural language questions, explore documents, and generate output across multiple tasks — all with real-time responsiveness and zero external dependencies. LibreChat supports model switching, chat history, inline code rendering, and search integration out of the box.

It’s designed to feel intuitive for non-technical users while giving your internal teams access to powerful LLM-backed reasoning tools. And because it’s integrated with IronCloud’s backend, responses can be grounded in your internal data — whether that means project wikis, tickets, documents, or source code.

This isn’t a simplified chatbot. It’s a real AI interface, deployed securely, using only the models and data you authorize.

Contextual Conversations

Users can start structured chats grounded in internal data — directly within a private interface.

Inline Code + Reasoning

Responses can include executable code, document references, or task breakdowns, all generated locally.

Model Flexibility

Swap between self-hosted models securely. No cloud calls. No public API traffic.

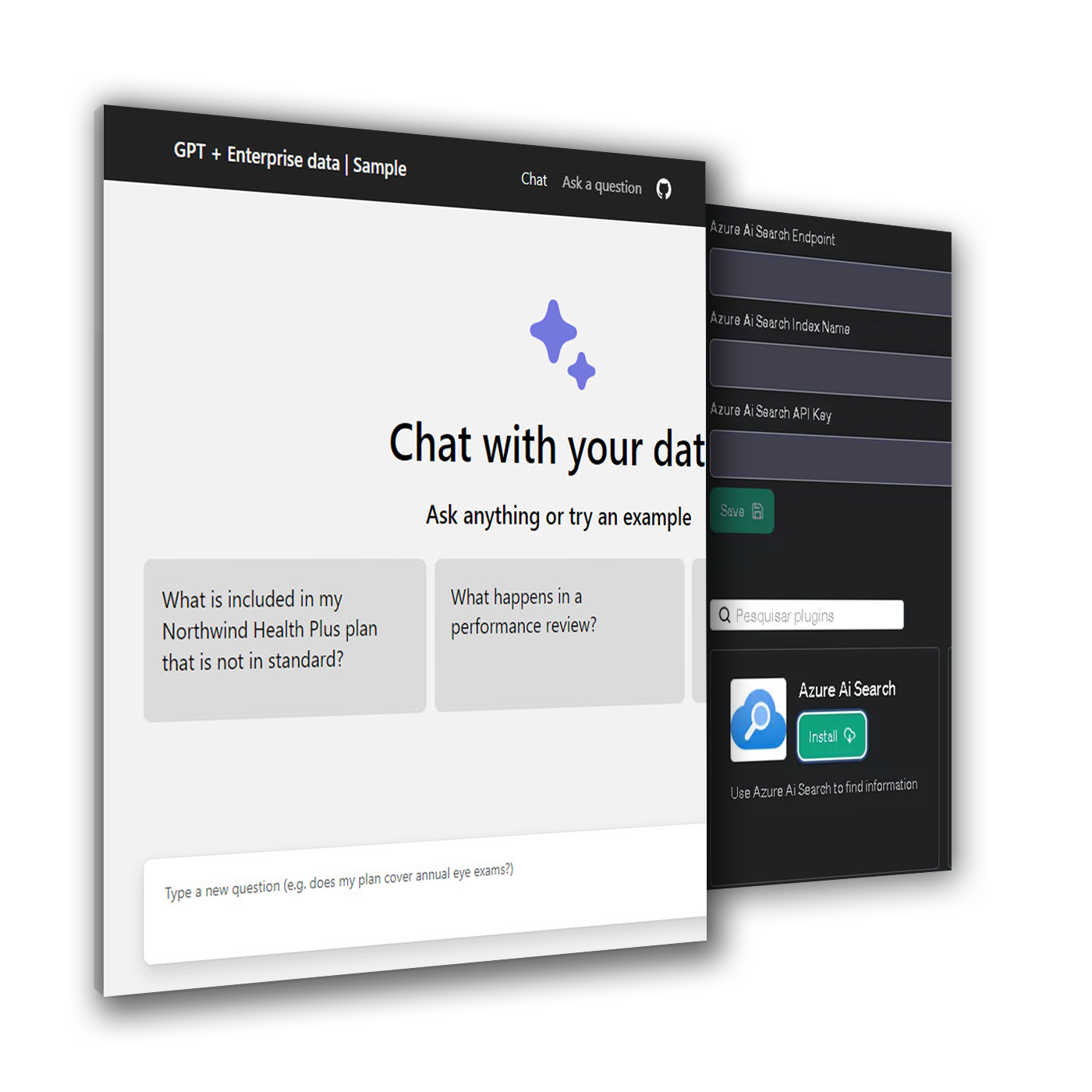

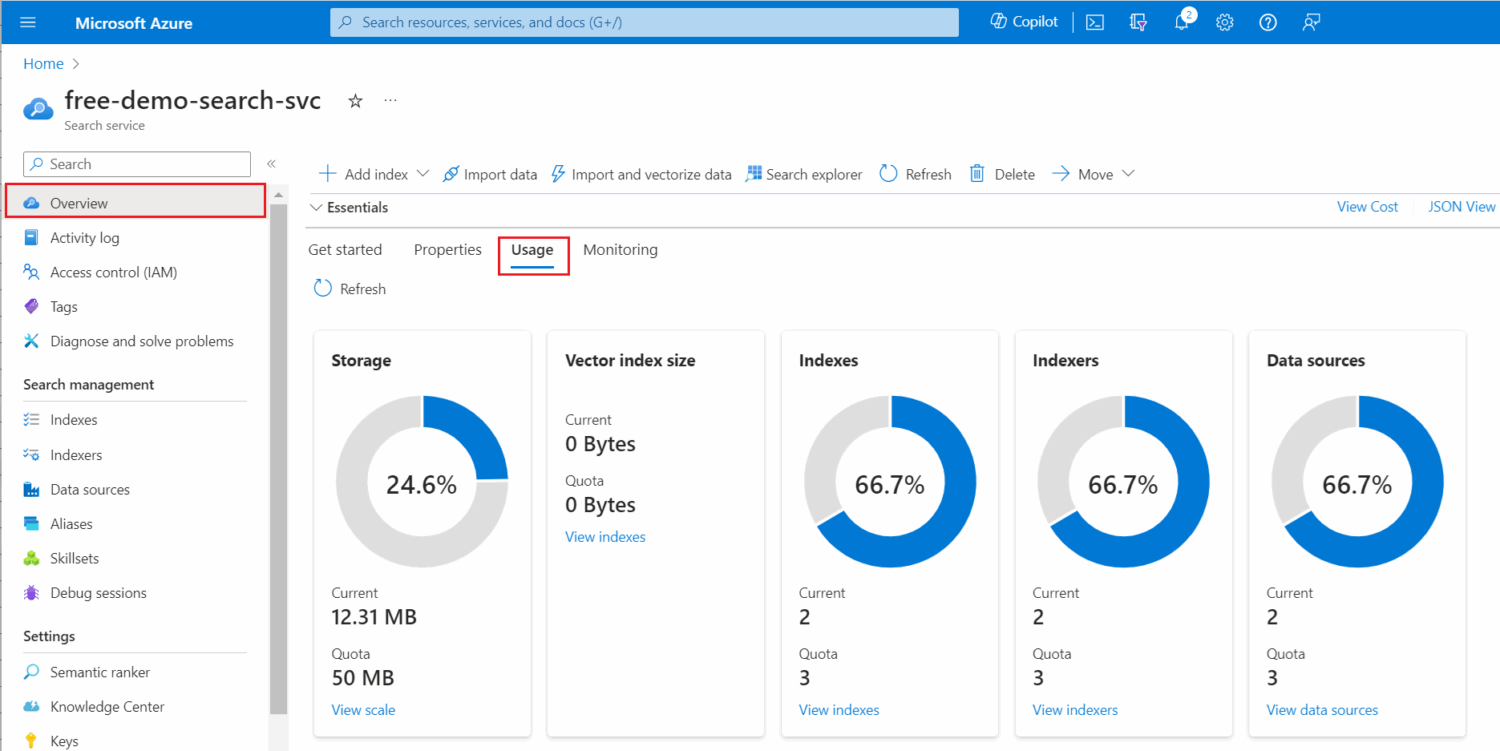

Fast, Private Search Over Your Own Data

IronCloud builds semantic indexes over your internal systems — enabling retrieval-augmented generation without exposing a single query.

Your content is only useful if you can find it. IronCloud uses secure, self-hosted indexing pipelines to turn your internal documents, tickets, wikis, and source code into a searchable knowledge base — all behind your firewall.

Powered by Azure AI Search and custom indexers, the platform builds semantic indexes that work across structured and unstructured data. Content becomes instantly accessible via natural language queries, and the results are fast, permission-aware, and traceable.

Unlike public-facing AI tools, IronCloud doesn’t send queries to the cloud or rely on external APIs. All indexing, embedding, and retrieval happen locally, so you retain full control over what’s stored, what’s searchable, and who has access.

This is the foundation of our retrieval-augmented generation (RAG) pipeline: a clean, scalable search layer that feeds your LLM with real context from your environment — not hallucinated answers from the web.
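
As a rough illustration of the retrieve-then-generate flow described above, the Python sketch below ranks a few in-memory documents against a question and assembles a grounded prompt. It is not IronCloud code: the function names, the sample documents, and the bag-of-words `embed` function (a toy stand-in for a real embedding model and semantic index) are all assumptions made for the example.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: lowercase term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=2):
    """Return up to k documents with non-zero similarity, best first."""
    q = embed(query)
    scored = [(cosine(q, embed(doc["text"])), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(query, hits):
    """Place retrieved passages, tagged with their source, ahead of the question."""
    context = "\n".join(f"[{d['source']}] {d['text']}" for d in hits)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical sample content; a real index would hold wikis, tickets, and code.
DOCUMENTS = [
    {"source": "wiki/deploy", "text": "Deployments run every Tuesday after the release review."},
    {"source": "ticket/OPS-12", "text": "The staging database was migrated to the new cluster."},
    {"source": "wiki/onboarding", "text": "New hires request laptop access through the IT portal."},
]

question = "When do deployments run?"
hits = retrieve(question, DOCUMENTS, k=1)
prompt = build_prompt(question, hits)
print(prompt)
```

Here the question shares terms only with the `wiki/deploy` entry, so that passage is the one placed in the prompt. Keeping the source tag next to each passage is what makes an answer traceable back to the document it came from.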

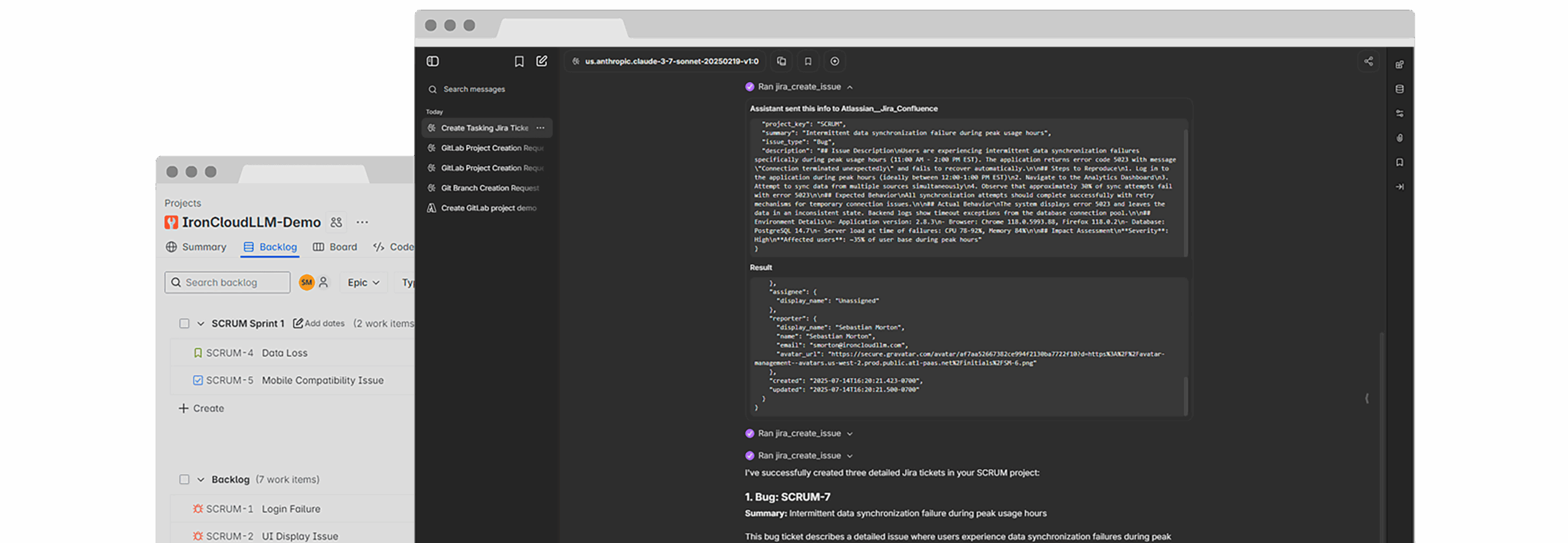

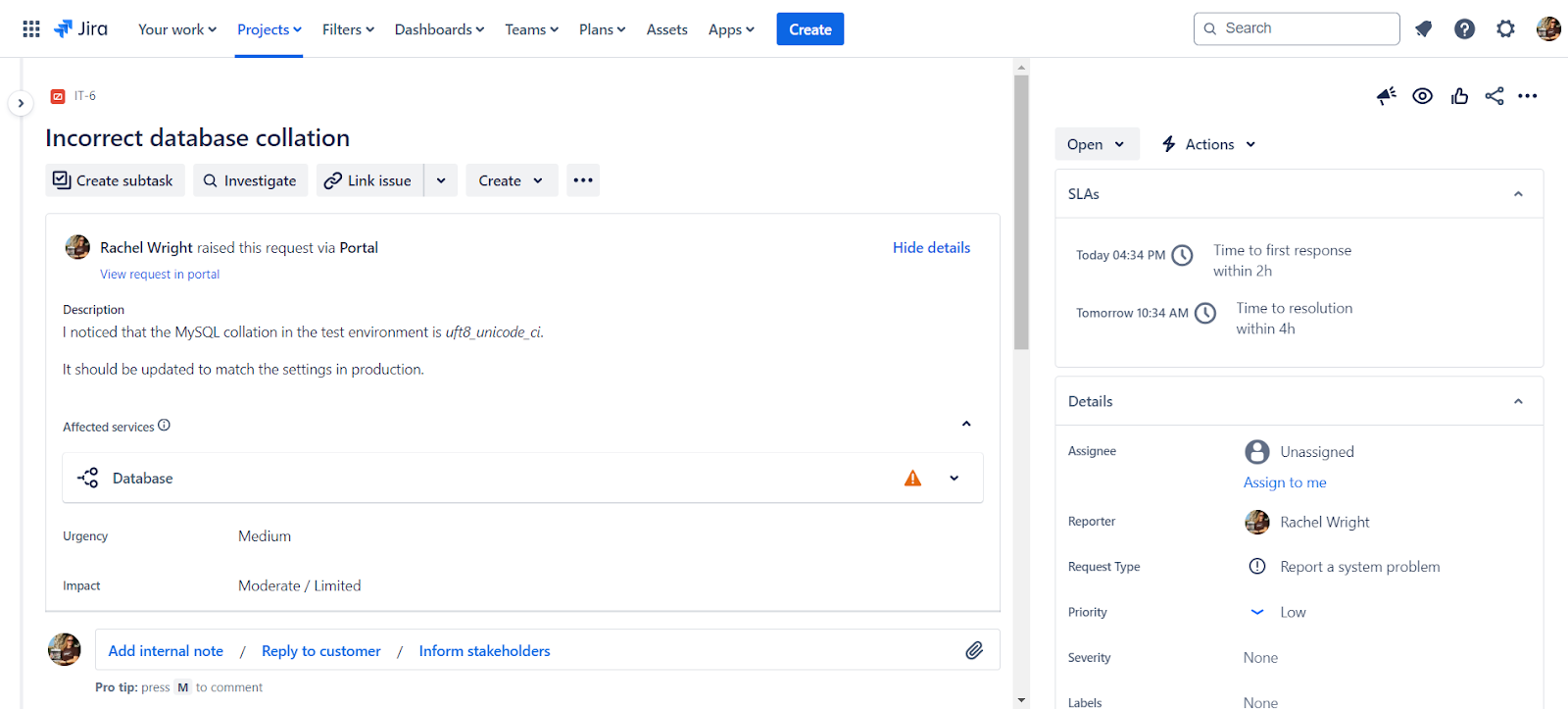

Connect to the Systems You Already Use

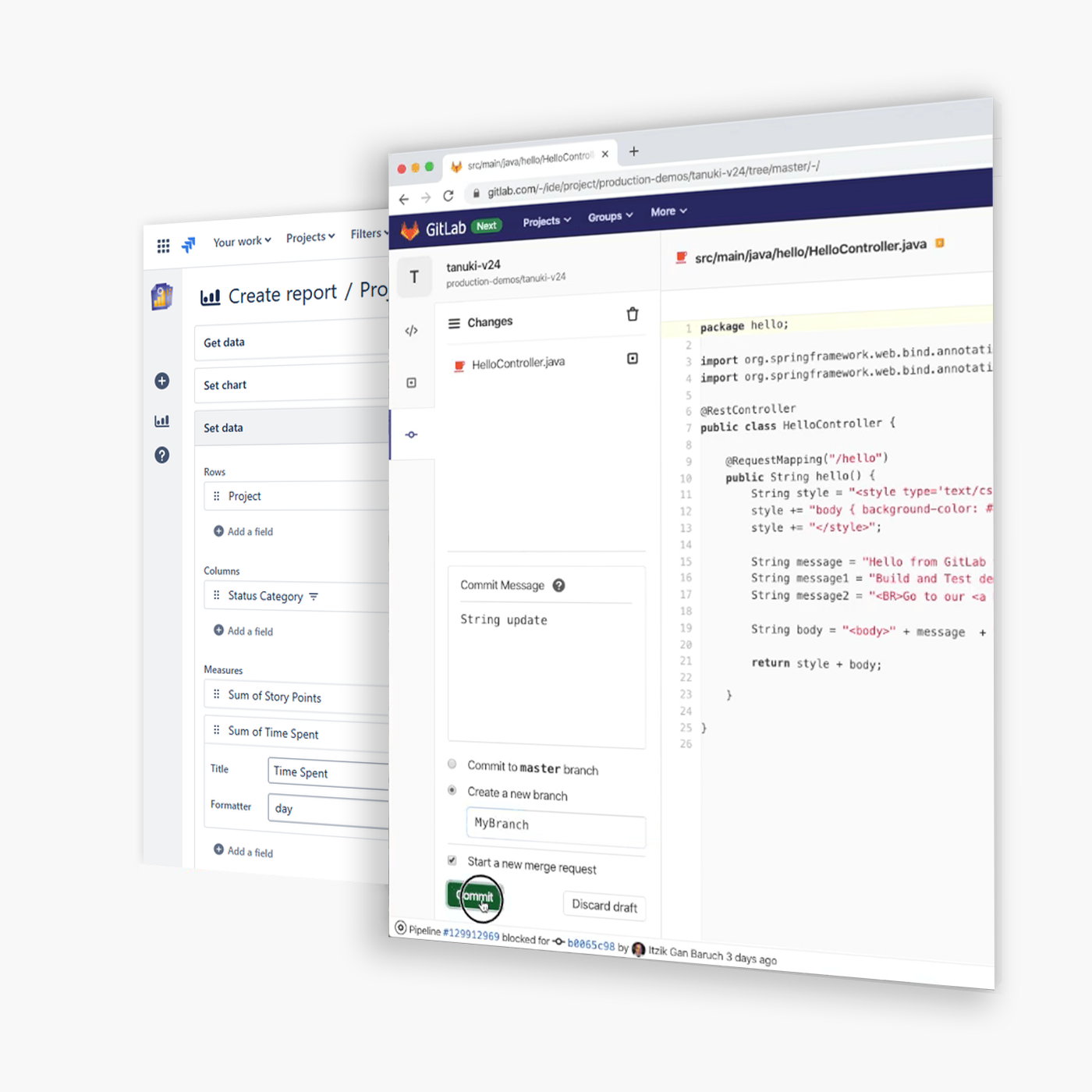

IronCloud pulls real-time data from Jira, Confluence, Monday.com, SharePoint, Git, and many more, with zero plugins or vendor lock-in.

Your teams already work in tools like Jira, Confluence, SharePoint, and Git. IronCloud doesn’t try to replace them — it connects directly to them through our lightweight integration layer based on the Model Context Protocol (MCP).

This allows IronCloud to ingest data from your internal platforms securely, index that content for search, and make it available for retrieval-augmented generation and document interaction — all without breaking your existing workflows or needing third-party plugins.
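
For readers unfamiliar with MCP, it exchanges JSON-RPC 2.0 messages, and a client invokes a server-side tool with the `tools/call` method. The sketch below only builds such a request in Python. The tool name `search_issues` and its arguments are hypothetical, and nothing here is taken from IronCloud's actual integration layer.

```python
import json


def make_tool_call(request_id, tool_name, arguments):
    """Build an MCP-style JSON-RPC 2.0 request that invokes a named tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool and arguments, chosen only for illustration.
request = make_tool_call(1, "search_issues", {"query": "login timeout", "limit": 5})
print(json.dumps(request, indent=2))
```

Because every connected system is reached through the same message shape, adding a new source means exposing another tool rather than writing a new client.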

Each connection honors existing access controls and permission models. No new user databases. No risky syncs. Just real-time, read-only visibility into the data your teams already rely on.
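
One way to picture permission-aware, read-only access is to filter records against the groups a user already belongs to in the source system. The Python sketch below assumes a hypothetical `Record` type with an `allowed_groups` field and made-up group names. It illustrates the idea only and does not describe how IronCloud enforces permissions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: records are read-only once loaded
class Record:
    source: str
    title: str
    allowed_groups: frozenset


def visible_records(records, user_groups):
    """Keep only records that share at least one group with the user."""
    groups = frozenset(user_groups)
    return [r for r in records if r.allowed_groups & groups]


# Hypothetical records mirroring permissions from the source systems.
RECORDS = [
    Record("jira", "Fix login timeout", frozenset({"engineering"})),
    Record("confluence", "Salary bands", frozenset({"hr"})),
    Record("git", "README.md", frozenset({"engineering", "support"})),
]

titles = [r.title for r in visible_records(RECORDS, {"engineering"})]
print(titles)
```

A user in the `engineering` group sees the Jira issue and the README but not the HR page, and a user with no matching group sees nothing.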

The result: your AI isn’t guessing. It’s working with context pulled directly from your actual project tickets, internal documentation, codebases, and repositories — with full control over what’s visible, searchable, and auditable.

Search Across Code and Projects

Query issues, source files, and internal documentation — all from a single private interface that understands your tools.